My personal performance #30daysoftesting challenge days 10 to 19

What time is it? Game time! Well, almost: it’s time for the latest #30daysoftesting challenge. Once again it is organized by Ministry of Testing, this time with some support from the PerfGuild, which leads us to the topic of this challenge: performance testing. Instead of writing a blog post afterwards, I decided to start one right at the beginning and keep updating it throughout the challenge. And as things were getting rather long, I decided to split the one post into three smaller ones.

My personal performance #30daysoftesting challenge days 1-9

My personal performance #30daysoftesting challenge days 10-19 (this one)

My personal performance #30daysoftesting challenge days 20-31

Day 10: Explore the difference between load testing and stress testing

stress testing vs. load testing summed up: https://t.co/bQ0sbLvtf4 #30daysoftesting #day10

— Christian Kram (@chr_kram) July 10, 2017

Finally I was able to get a Spinal Tap reference in here somewhere. But seriously, I had an intuitive idea of the differences and tried to verify or falsify it by consulting different sources. The first and easiest choice was Wikipedia. Interestingly, the German version provides a circular reference, which wasn’t much help:

German @wikipedia says “Lasttest” comes from English https://t.co/mO6YUTXBS6 on English version & you’ll get to stress test #30daysoftesting

— Christian Kram (@chr_kram) July 10, 2017

So I turned to the book I chose for day 1:

No clear-cut definition there either, though there are some references to stress testing as pushing a system past a certain threshold, be it through concurrent access or through ordinary access over a prolonged period of time. That pretty much matches my intuitive definition: a stress test exposes a system to an unusual load beyond its limits, while a load test exposes a system to a defined load to see how it reacts, thus trying to identify its limits.
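To make that distinction a bit more tangible, here is a minimal Python sketch against a made-up toy system. `ToySystem`, `load_test` and `stress_test` are hypothetical names, and the response-time formula is the textbook M/M/1 queueing approximation, not a model of any real application. The load test applies defined load levels and checks them against a goal; the stress test keeps pushing past normal levels until the system breaks.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ToySystem:
    """Hypothetical stand-in for a real system under test."""
    capacity_rps: float     # maximum requests per second the system can handle
    service_time_ms: float  # response time when the system is idle

    def response_time_ms(self, load_rps: float) -> Optional[float]:
        """M/M/1-style mean response time; None means the system is overloaded."""
        utilization = load_rps / self.capacity_rps
        if utilization >= 1.0:
            return None
        return self.service_time_ms / (1.0 - utilization)


def load_test(system: ToySystem, levels_rps: List[float], max_latency_ms: float) -> Dict[float, bool]:
    """Load test: apply defined load levels and check each one against a latency goal."""
    results = {}
    for rps in levels_rps:
        rt = system.response_time_ms(rps)
        results[rps] = rt is not None and rt <= max_latency_ms
    return results


def stress_test(system: ToySystem, start_rps: float, step_rps: float, max_steps: int = 1000) -> Optional[float]:
    """Stress test: keep raising the load beyond normal levels until the system breaks.

    Returns the first load level at which the system failed, or None if it held up for max_steps.
    """
    rps = start_rps
    for _ in range(max_steps):
        if system.response_time_ms(rps) is None:
            return rps
        rps += step_rps
    return None


system = ToySystem(capacity_rps=100, service_time_ms=20)
print(load_test(system, [25, 50, 75, 90], max_latency_ms=100))  # {25: True, 50: True, 75: True, 90: False}
print(stress_test(system, start_rps=50, step_rps=10))  # 100
```

With a made-up capacity of 100 requests per second, the load test passes up to 75 rps and misses the (arbitrary) 100 ms goal at 90 rps, while the stress test reports 100 rps as the breaking point.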

Day 11: Inspect and document the differences between your production database and your test database

This one is actually a tough one, as it presumes that there is such a thing as a single production database.

The nice thing about working for an ERP software company is that there isn’t. We support several DBMS, and of course the system we set up in-house is pretty different from what customers use out there. Which is not much of a surprise if your customers are all in the government sector while you are a software company. So what we have done is set up several test databases which prototypically resemble what our customers use and what we have cleared our software for.
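Documenting those differences is mostly a matter of writing them down in a comparable way. A minimal sketch of how that could look, assuming each setup is described as a simple dictionary of properties. The function names are hypothetical, and the profiles and values below are invented for illustration, not our actual customer setups.

```python
from typing import Any, Dict, List, Tuple


def diff_profiles(reference: Dict[str, Any], candidate: Dict[str, Any]) -> List[Tuple[str, Any, Any]]:
    """Return (property, reference value, candidate value) for every property that differs."""
    keys = sorted(set(reference) | set(candidate))
    # A property missing on one side shows up with None for that side.
    return [(k, reference.get(k), candidate.get(k)) for k in keys if reference.get(k) != candidate.get(k)]


def as_markdown_table(diffs: List[Tuple[str, Any, Any]], left: str, right: str) -> str:
    """Render the differences as a small table for the test documentation."""
    lines = [f"| Property | {left} | {right} |", "|---|---|---|"]
    lines += [f"| {k} | {a} | {b} |" for k, a, b in diffs]
    return "\n".join(lines)


# Made-up example profiles, not real customer data.
customer_setup = {"dbms": "DBMS A", "version": "12", "bills": 2_000_000}
test_database = {"dbms": "DBMS A", "version": "11", "bills": 50_000}

print(as_markdown_table(diff_profiles(customer_setup, test_database), "Customer", "Test DB"))
```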

Day 12: Design a performance test for your most visited site or called API

I skipped this one, as I attended my company’s user congress to give a little talk on our testing activities and to co-host a workshop on how several customers might join forces for their acceptance tests.

Awesome location for the @MACH_AG User Congress #anwenderkongress #würzburg pic.twitter.com/DCRYM0IhsE

— Christian Kram (@chr_kram) July 12, 2017

Day 13: Share a photo of your applications CPU utilization on production

See Day 12. And Day 11 as well: our internal production system is not really representative of what our customers have installed, so its CPU utilization would not be that meaningful.

Day 14: Install an open source performance testing tool and familiarize yourself with it

I dare give myself half a check on this one: I tried to get an overview of the tools out there and decided that, instead of just jumping into one, I want a clear idea of what to look for while familiarizing myself with a tool. That especially concerns whether and how I could use the tool in my working environment. Well, what can I say? I haven’t done that yet. But the three tools I am considering looking at are (in order of likelihood):

Day 15: Watch and then share a video on performance testing

While browsing YouTube for performance testing, this was the first video I simply had to watch (once a baller, always a baller):

While that one technically fulfils the criterion of performance testing, I guess the challenge is about software performance testing. So I went for this one, since the title suggests that it covers the management aspect, something I want to learn more about (at the time of writing I have only watched the first 15 minutes, shame on me):

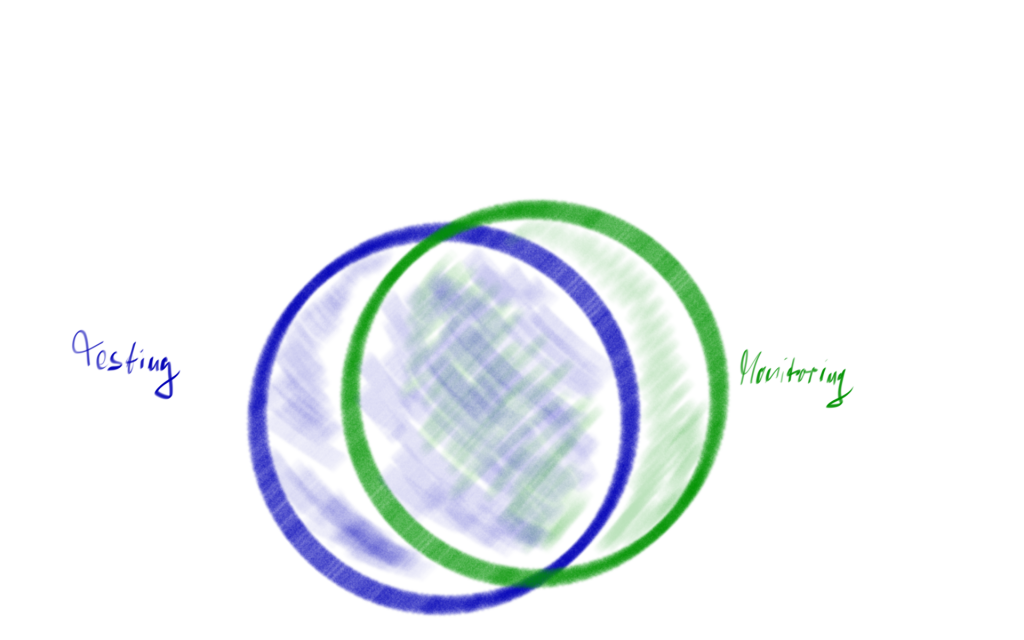

Day 16: Compare and contrast performance testing and monitoring

My understanding is that these two share some common ground and that you need monitoring to do performance testing. You could say that monitoring deals with observing a system, while performance testing is about putting the system into a desired state. Monitoring can thus stand on its own, while testing probably shouldn’t.

| Aspect | Testing | Monitoring |
|---|---|---|
| Gathering information | + | + |
| Defined environment | + | o |
| Defined load | + | - |
| Live user behaviour | o | + |
| Active testing | + | - |
| To be interpreted | + | + |

(+ = yes, o = partly, - = no)

Both are about gathering information about a system in relation to a certain load or performance. Testing should be done in a defined environment to ease comparison, while monitoring doesn’t necessarily need one, although to some extent it has one simply by virtue of the system it is monitoring (does that make sense to anybody else? 😉). Testing applies a given load to see what happens; monitoring simply shows what is happening regardless of the load, which may vary, especially if you use monitoring in production. There you have real user behaviour, while testing tries to simulate it. And of course both need to be interpreted.
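The table boils down to one structural difference, which this hypothetical Python sketch tries to capture: both sides share the same `summarize` step (gathering information that still has to be interpreted), but only the test controls the load. All names here are made up for illustration, and `send_request` is a placeholder for whatever actually hits the system.

```python
import statistics
from typing import Callable, Dict, Iterable


def summarize(response_times_ms: Iterable[float]) -> Dict[str, float]:
    """Shared by both: turn raw observations into numbers that still need interpreting."""
    data = list(response_times_ms)
    return {"count": len(data), "mean": statistics.mean(data), "max": max(data)}


def monitor(observed_ms: Iterable[float]) -> Dict[str, float]:
    """Monitoring: passively summarize whatever real traffic produced; the load is not ours to choose."""
    return summarize(observed_ms)


def performance_test(send_request: Callable[[], float], requests: int) -> Dict[str, float]:
    """Testing: actively apply a defined load (here simply a fixed number of requests) and summarize it."""
    return summarize(send_request() for _ in range(requests))


# Placeholder observations and a fake request function, for illustration only.
print(monitor([120.0, 80.0, 310.0]))
print(performance_test(lambda: 95.0, requests=50))
```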

Day 17: Explore how easy it would be for you to create data for a 10,000 user performance test on your application

I skipped this one for now, but I figure that creating the data, i.e. manipulating the database to include 10,000 bills and receipts, wouldn’t be much of a problem. The tricky part at the moment would rather be getting concurrent usage for the test itself.
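For the data part, a sketch of what generating 10,000 bills with matching receipts could look like, using Python’s built-in `sqlite3` module and an in-memory database. The schema and the function names are hypothetical and minimal, and have nothing to do with our actual ERP tables; seeding the random generator keeps the data reproducible between test runs.

```python
import random
import sqlite3
from datetime import date, timedelta


def create_schema(conn: sqlite3.Connection) -> None:
    """Hypothetical minimal schema; real ERP tables look very different."""
    conn.execute(
        "CREATE TABLE bills (id INTEGER PRIMARY KEY, customer_id INTEGER NOT NULL, "
        "amount_cents INTEGER NOT NULL, issued_on TEXT NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE receipts (id INTEGER PRIMARY KEY, "
        "bill_id INTEGER NOT NULL REFERENCES bills(id), paid_on TEXT NOT NULL)"
    )


def generate_test_data(conn: sqlite3.Connection, count: int = 10_000, seed: int = 42) -> None:
    """Insert `count` bills plus one receipt per bill, paid 0 to 30 days after issue."""
    rng = random.Random(seed)
    start = date(2017, 1, 1)
    bills = []
    receipts = []
    for bill_id in range(1, count + 1):
        issued = start + timedelta(days=rng.randint(0, 364))
        paid = issued + timedelta(days=rng.randint(0, 30))
        bills.append((bill_id, rng.randint(1, 500), rng.randint(100, 500_000), issued.isoformat()))
        receipts.append((bill_id, bill_id, paid.isoformat()))
    # All inserts run in one transaction until commit(), which keeps SQLite fast.
    conn.executemany("INSERT INTO bills VALUES (?, ?, ?, ?)", bills)
    conn.executemany("INSERT INTO receipts VALUES (?, ?, ?)", receipts)
    conn.commit()


conn = sqlite3.connect(":memory:")
create_schema(conn)
generate_test_data(conn)
print(conn.execute("SELECT COUNT(*) FROM bills").fetchone()[0])  # 10000
```

This only covers the data side; the concurrent usage would still need a load tool on top.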

Day 18: Research Workload Models and share your findings

Does it make me old that my first thought about this was “that’s like those waveforms used for making sound on the C64”? Coincidentally, I read about that topic in my day 1 book today, in a section about thinking beforehand about how many virtual users should be used at what point in time. And it seems I wasn’t the only one unfamiliar with the concept: Ali Hill put up a nice summary here.
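The simplest workload model I came across is a ramp-up / steady state / ramp-down profile, i.e. exactly the question of how many virtual users are active at what point in time. A minimal sketch, with the function name, parameter names and numbers all chosen for illustration only:

```python
from typing import List


def virtual_users_at(t: float, peak_users: int, ramp_up_s: float, steady_s: float, ramp_down_s: float) -> int:
    """Active virtual users at time t (seconds) for a ramp-up / steady / ramp-down profile."""
    if t < 0:
        return 0
    if t < ramp_up_s:
        # Linear ramp-up from 0 to peak_users.
        return round(peak_users * t / ramp_up_s)
    t -= ramp_up_s
    if t < steady_s:
        return peak_users
    t -= steady_s
    if t < ramp_down_s:
        # Linear ramp-down from peak_users back to 0.
        return round(peak_users * (1 - t / ramp_down_s))
    return 0


# Sample the profile every 30 seconds: 1 min ramp-up, 5 min steady state, 1 min ramp-down.
schedule: List[int] = [virtual_users_at(t, 100, 60, 300, 60) for t in range(0, 481, 30)]
print(schedule)
```

A load tool would then start or stop virtual users to follow such a schedule; other workload models just swap in a different shape.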

Day 19: Use a proxy tool to monitor the traffic from a web application

I have been fiddling around with Fiddler (oh, come on, you knew this lame pun was coming). Well, fiddling is probably the right word, as I didn’t do much more than install it (which actually wasn’t all that smooth) and play around a bit, trying to grasp the basic concept by working through the “observe traffic” chapter of the manual, which is actually quite a good one.
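To get the traffic of a script (rather than a browser) into Fiddler, the script has to use Fiddler as its proxy. A minimal Python sketch using the standard library’s `urllib.request.ProxyHandler`, assuming Fiddler Classic is running locally on its default address `127.0.0.1:8888` (worth checking in your own installation’s connection settings); `build_proxied_opener` is a hypothetical helper name.

```python
import urllib.request

# Assumption: Fiddler Classic listens on its default local address and port.
FIDDLER_PROXY = "http://127.0.0.1:8888"


def build_proxied_opener(proxy_url: str = FIDDLER_PROXY) -> urllib.request.OpenerDirector:
    """Return an opener that routes HTTP and HTTPS requests through the given proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxied_opener()
# With Fiddler running, a request like the following should show up in its session list:
# opener.open("http://example.com/", timeout=10)
```

HTTPS traffic additionally requires enabling HTTPS decryption in Fiddler and making the client trust Fiddler’s root certificate, so plain HTTP is the easier starting point.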